A new advance in AI technology could change the way we capture and edit real-life objects in 3D.

Researchers at Simon Fraser University (SFU) in Canada have introduced a technique called Proximity Attention Point Rendering (PAPR), which transforms 2D photos into editable 3D models.

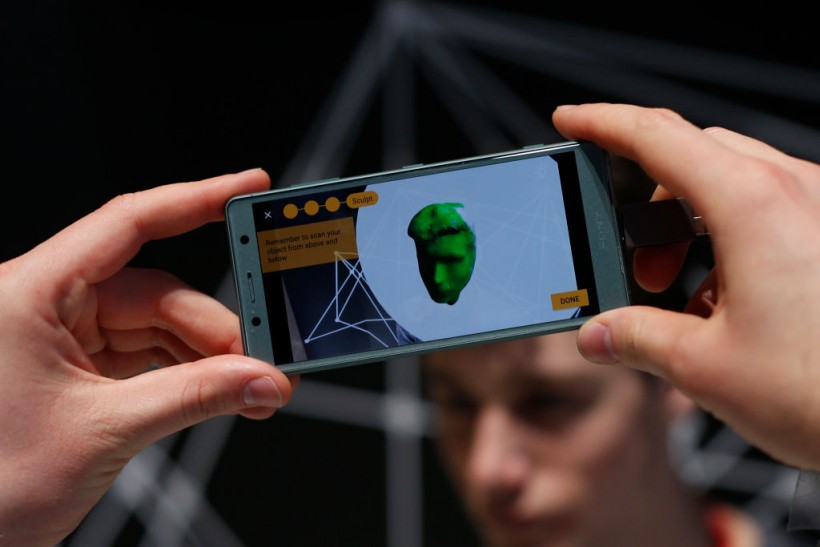

Rather than relying solely on traditional 2D photography, users may soon be able to create intricate 3D captures of objects with their smartphones.

(Photo: PAU BARRENA/AFP via Getty Images)

The PAPR Technology

The PAPR technology, showcased by SFU researchers at the 2023 Conference on Neural Information Processing Systems (NeurIPS), takes a series of 2D photos of an object and converts them into a cloud of 3D points that represents the object’s shape and appearance.

Dr. Ke Li, an assistant professor of computer science at SFU and the paper’s senior author, highlights the significant impact of AI and machine learning on reshaping the process of reconstructing 3D objects from 2D images.

The success of these technologies in fields like computer vision has inspired researchers to explore new avenues for leveraging deep learning in 3D graphics pipelines.

The challenge lies in finding a method to represent 3D shapes that allows for intuitive editing. Previous approaches, such as neural radiance fields (NeRFs) and 3D Gaussian splatting (3DGS), have limitations when it comes to shape editing, according to the researchers.

The breakthrough came after the development of a machine-learning model capable of learning an interpolator using a novel mechanism called proximity attention.

This approach allows each 3D point in the point cloud to act as a control point in a continuous interpolator, facilitating intuitive shape editing. Users can easily manipulate the object by adjusting individual points, altering its shape and appearance.
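To make the idea of points acting as control points more concrete, here is a minimal Python sketch. It is not the PAPR model: the researchers' proximity attention is learned by a neural network, whereas this toy swaps in a fixed softmax over distances as a stand-in. The function names, the `temperature` parameter, and the three-point cloud are all hypothetical and chosen only for illustration.

```python
import math

def proximity_weights(query, points, temperature=0.1):
    """Softmax over negative squared distances: nearer points get larger weights.

    Assumption: a fixed distance-based softmax stands in for learned attention.
    """
    scores = [
        -sum((q - p) ** 2 for q, p in zip(query, pt)) / temperature
        for pt in points
    ]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def interpolate(query, points, features, temperature=0.1):
    """Blend per-point feature vectors using the proximity weights."""
    weights = proximity_weights(query, points, temperature)
    dim = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]

# Hypothetical toy point cloud: three control points, each with an RGB color.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

query = (0.9, 0.1, 0.0)
before = interpolate(query, points, colors)

# "Edit" the shape by dragging the second control point away from the query.
edited = list(points)
edited[1] = (1.0, 0.0, 2.0)
after = interpolate(query, edited, colors)
```

In this toy, the query location takes its color almost entirely from the nearest point (green) before the edit. Once that point is dragged away, the next-nearest point (red) dominates, which loosely mirrors how moving a single control point can reshape the surface around it.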

Furthermore, the rendered 3D point cloud can be viewed from various angles and converted into 2D photos, providing a realistic view of the edited object.
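As a rough illustration of viewing a point cloud from different angles, the sketch below rotates a few 3D points and projects them onto a 2D image plane with a simple pinhole camera. This is a textbook projection, not PAPR's renderer, which produces realistic images with a learned model. The helper names, `focal`, and `camera_distance` are assumptions made for this example.

```python
import math

def rotate_y(point, angle):
    """Rotate a 3D point about the vertical (y) axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)

def project(point, focal=1.0, camera_distance=3.0):
    """Pinhole projection for a camera on the z-axis looking at the origin."""
    x, y, z = point
    depth = z + camera_distance  # distance from the camera along the view axis
    return (focal * x / depth, focal * y / depth)

def render_view(points, angle):
    """Return the 2D image coordinates of every point after turning the object."""
    return [project(rotate_y(p, angle)) for p in points]

# Hypothetical toy point cloud.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]

front = render_view(points, 0.0)          # object seen head-on
side = render_view(points, math.pi / 2)   # object turned a quarter of the way round
```

Turning the object by a quarter turn moves the point on the x-axis onto the viewing axis, so it lands at the center of the image, while the point on the y-axis keeps its position. That is the sense in which the same set of points yields different 2D pictures from different viewpoints.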

AI-Driven Paradigm Shift

“AI and machine learning are really driving a paradigm shift in the reconstruction of 3D objects from 2D images. The remarkable success of machine learning in areas like computer vision and natural language is inspiring researchers to investigate how traditional 3D graphics pipelines can be re-engineered with the same deep learning-based building blocks that were responsible for the runaway AI success stories of late,” said Li.

“It turns out that doing so successfully is a lot harder than we anticipated and requires overcoming several technical challenges. What excites me the most is the many possibilities this brings for consumer technology: 3D may become as common a medium for visual communication and expression as 2D is today,” he added.

The findings of the study were published on arXiv.

ⓒ 2024 TECHTIMES.com All rights reserved. Do not reproduce without permission.