
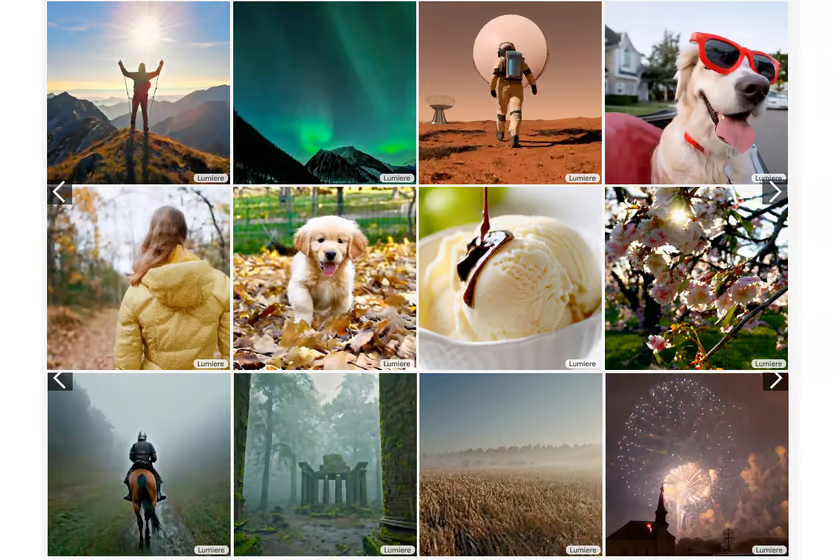

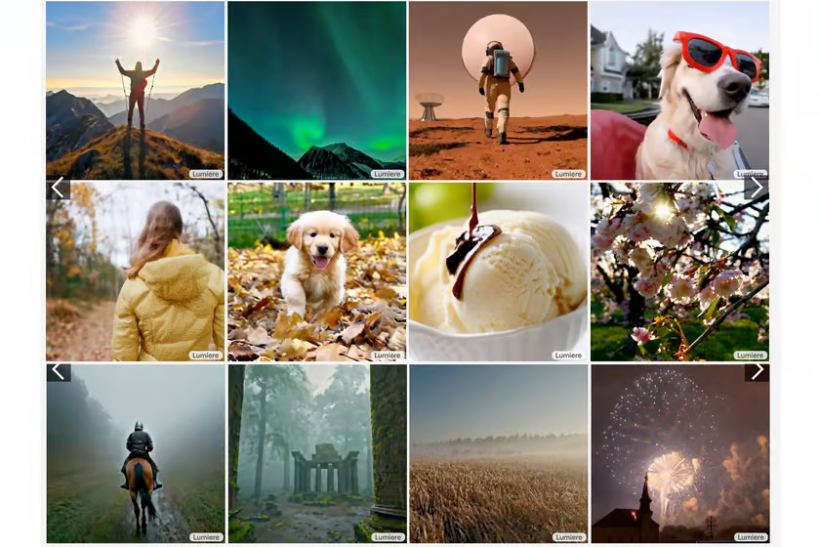

Google has unveiled “Lumiere,” a cutting-edge text-to-video artificial intelligence (AI) generator designed to synthesize videos with realistic, diverse and coherent motion.

The project, developed by Google Research, introduces a Space-Time U-Net architecture that generates the entire temporal duration of a video in a single pass through the model.

“We introduce Lumiere — a text-to-video diffusion model designed for synthesizing videos that portray realistic, diverse and coherent motion — a pivotal challenge in video synthesis. To this end, we introduce a Space-Time U-Net architecture that generates the entire temporal duration of the video at once, through a single pass in the model,” the Google research team wrote in their paper.

Google Unveils Lumiere

Unlike existing video models, which first generate distant keyframes and then fill the gaps with temporal super-resolution, Lumiere produces the whole clip at once, which makes global temporal consistency easier to achieve.
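To make that contrast concrete, the minimal Python sketch below (not Google's code) lists which frames each stage works on in the two designs. The function names, the 16-frame clip and the keyframe stride of 4 are hypothetical values chosen for illustration, not figures from Lumiere.

```python
from typing import List, Tuple

# Hypothetical illustration only; this is not Lumiere's actual code.
Window = Tuple[int, int]  # inclusive (first_frame, last_frame) a stage works on


def cascade_schedule(num_frames: int, stride: int) -> Tuple[List[int], List[Window]]:
    """Keyframe-then-TSR cascade (hypothetical).

    A base model produces every `stride`-th frame; a temporal
    super-resolution (TSR) stage then fills the gap between each pair of
    neighbouring keyframes, seeing only that short window.
    """
    keyframes = list(range(0, num_frames, stride))
    if keyframes[-1] != num_frames - 1:
        keyframes.append(num_frames - 1)  # anchor the final frame as a keyframe
    windows = list(zip(keyframes, keyframes[1:]))
    return keyframes, windows


def single_pass_schedule(num_frames: int) -> List[Window]:
    """Single-pass design: one window spanning the whole clip."""
    return [(0, num_frames - 1)]


if __name__ == "__main__":
    keys, tsr_windows = cascade_schedule(16, 4)
    print("keyframes:", keys)
    print("TSR windows:", tsr_windows)
    print("single pass:", single_pass_schedule(16))
```

In the cascade, each TSR window sees only its two neighbouring keyframes, so nothing in the schedule directly links frame 2 to frame 14. The single-pass window covers every frame at once, which is the property the researchers point to when they say global temporal consistency becomes more attainable.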

The architecture combines spatial and temporal down- and up-sampling with a pre-trained text-to-image diffusion model. This lets Lumiere directly produce a full-frame-rate, low-resolution video by processing it at multiple space-time scales.
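A toy Python sketch can illustrate what down- and up-sampling in both space and time means. It treats a grayscale video as nested lists indexed video[t][y][x] and is only an illustration under stated assumptions: plain 2x2x2 average pooling stands in for down-sampling and nearest-neighbour repetition for up-sampling, whereas the actual network uses learned layers. All helper names are hypothetical.

```python
from typing import List, Tuple

# Hypothetical illustration only; Lumiere's real layers are learned, not fixed pooling.
Video = List[List[List[float]]]  # video[t][y][x], one grayscale value per pixel


def shape(video: Video) -> Tuple[int, int, int]:
    """Return (frames, height, width)."""
    return len(video), len(video[0]), len(video[0][0])


def downsample_space_time(video: Video) -> Video:
    """Halve time, height and width by averaging non-overlapping 2x2x2 blocks.

    Odd trailing frames, rows or columns are dropped.
    """
    frames, height, width = shape(video)
    return [
        [
            [
                sum(video[t + dt][y + dy][x + dx]
                    for dt in (0, 1) for dy in (0, 1) for dx in (0, 1)) / 8.0
                for x in range(0, width - 1, 2)
            ]
            for y in range(0, height - 1, 2)
        ]
        for t in range(0, frames - 1, 2)
    ]


def upsample_space_time(video: Video) -> Video:
    """Double time, height and width by repeating each value (nearest neighbour)."""
    return [
        [[value for value in row for _ in (0, 1)] for row in frame for _ in (0, 1)]
        for frame in video for _ in (0, 1)
    ]


if __name__ == "__main__":
    # A 4-frame, 4x4 clip whose pixel values encode position in time and space.
    clip = [[[float(t * 100 + y * 10 + x) for x in range(4)] for y in range(4)]
            for t in range(4)]
    coarse = downsample_space_time(clip)
    print(shape(clip), "->", shape(coarse), "->", shape(upsample_space_time(coarse)))
```

Each down-sampling step here shrinks the clip by a factor of 8 (2 in time, 2 in height, 2 in width) rather than the factor of 4 a spatial-only step would give, which is one plausible way to see how working at coarser space-time scales keeps a single pass over a full-frame-rate clip tractable.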

The text-to-video framework from Google Research marks a significant step forward in video synthesis. By building on a pre-trained text-to-image diffusion model and addressing the temporal-consistency limits of keyframe-based methods, Lumiere achieves what the researchers describe as state-of-the-art text-to-video generation results.

The Space-Time U-Net architecture generates full-frame-rate video clips and supports applications ranging from image-to-video generation and video inpainting to stylized content generation.

T2I Model

The study acknowledges limitations, specifying that Lumiere is not designed to generate videos with multiple shots or scenes involving transitions. According to the Google team, this aspect remains an open challenge for future research.

Additionally, the model is built on a text-to-image (T2I) model that operates in pixel space, so it requires a spatial super-resolution module to produce high-resolution output.

Despite these limitations, the researchers suggest that Lumiere's design principles could also apply to latent video diffusion models, opening avenues for further exploration in text-to-video model development.

The primary objective of Lumiere is to empower novice users to create visual content creatively and flexibly. However, the researchers acknowledge the potential for misuse, emphasizing the importance of developing tools to detect biases and prevent malicious use cases.

Ensuring safe and fair use of this technology is deemed crucial, underscoring Google’s commitment to responsible AI development. In summary, Google’s Lumiere represents a breakthrough in text-to-video AI generation, offering a novel approach to synthesizing realistic and coherent motion in videos.

The innovative architecture and design principles showcased in this project set the stage for advancements in video synthesis technology, focusing on usability and responsible implementation.

“Our primary goal in this work is to enable novice users to generate visual content in an creative and flexible way. However, there is a risk of misuse for creating fake or harmful content with our technology, and we believe that it is crucial to develop and apply tools for detecting biases and malicious use cases in order to ensure a safe and fair use,” the researchers concluded.


ⓒ 2023 TECHTIMES.com All rights reserved. Do not reproduce without permission.